Intro

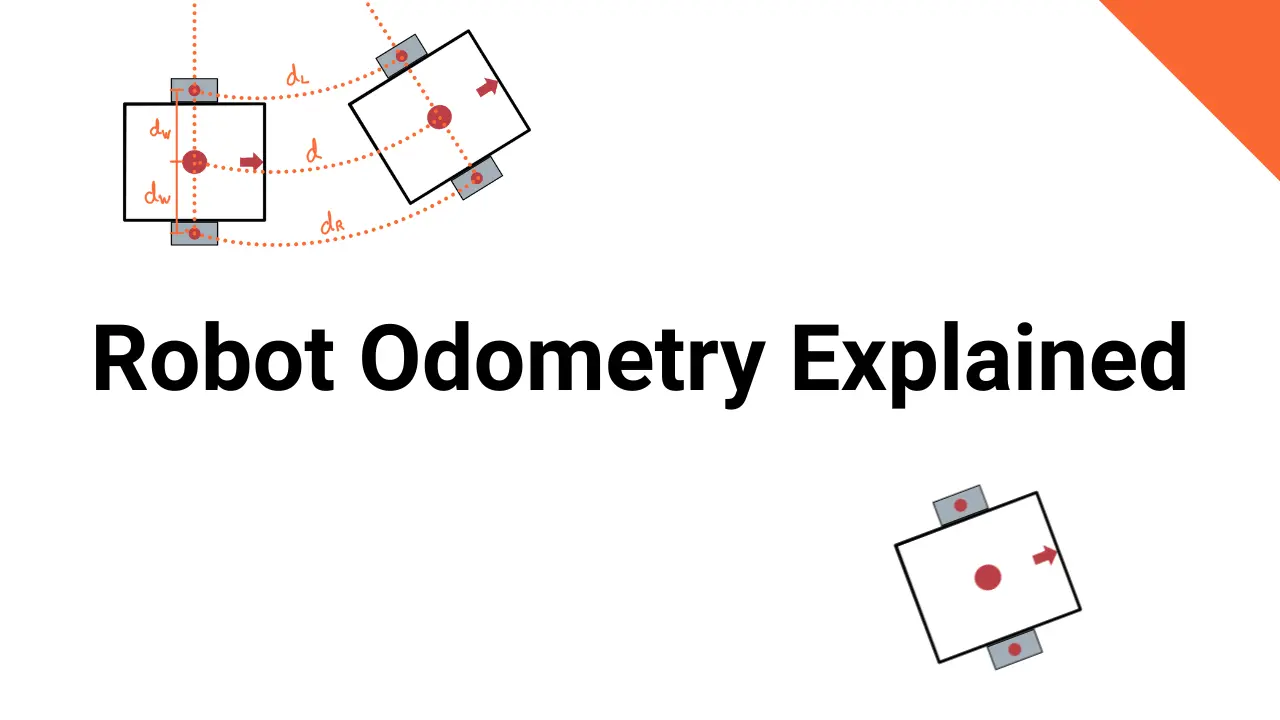

Odometry is how a robot uses data from its motion sensors to estimate the change in its position and orientation (its pose) by integrating its own motion over time [1].

In traditional wheel-based odometry, the robot uses sensors (like encoders) to track how much each wheel rotates, allowing it to calculate how far it has moved and whether it has turned. But odometry isn’t limited to wheeled robots. Visual odometry, for example, uses cameras to observe how the environment appears to move as the robot moves, estimating displacement by analyzing the motion of visual features. Similarly, inertial odometry relies on accelerometers and gyroscopes (together, an inertial measurement unit, or IMU) to estimate movement and rotation from measured accelerations and angular rates.
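To make the wheel-based case concrete, here is a minimal sketch of one odometry update for a differential-drive robot (two driven wheels on a common axle). The function name `update_pose` and the default values for `ticks_per_rev`, `wheel_radius`, and `wheel_base` are illustrative assumptions, not values from any particular robot.

```python
import math

def update_pose(x, y, theta, left_ticks, right_ticks,
                ticks_per_rev=360, wheel_radius=0.05, wheel_base=0.30):
    """Advance a differential-drive pose by one pair of encoder readings.

    All geometry defaults are hypothetical; lengths are in metres,
    angles in radians.
    """
    # Convert encoder ticks into the distance each wheel rolled.
    metres_per_tick = 2 * math.pi * wheel_radius / ticks_per_rev
    d_left = left_ticks * metres_per_tick
    d_right = right_ticks * metres_per_tick

    # Distance travelled by the robot's centre and its change in heading.
    d_center = (d_left + d_right) / 2
    d_theta = (d_right - d_left) / wheel_base

    # Integrate using the heading at the midpoint of the step.
    x += d_center * math.cos(theta + d_theta / 2)
    y += d_center * math.sin(theta + d_theta / 2)

    # Wrap the heading into [-pi, pi).
    theta = (theta + d_theta + math.pi) % (2 * math.pi) - math.pi
    return x, y, theta
```

Calling `update_pose` once per encoder reading and feeding each result into the next call is the "integrating motion over time" step: equal tick counts move the robot straight ahead, while unequal counts also rotate it.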

Modern robots often use sensor fusion techniques that combine different forms of odometry—such as visual-inertial or wheel-inertial odometry—to improve accuracy and reliability. Each method has its own strengths and weaknesses: wheel odometry is simple but suffers from drift due to slippage, while visual odometry can struggle in low-light or featureless environments. Inertial sensors provide fast updates but accumulate error quickly if used alone.
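As a rough illustration of the fusion idea, the sketch below blends two estimates of heading change for a single time step: one from a gyroscope and one from wheel encoders. It is a fixed-weight blend chosen for brevity; practical systems more often use a Kalman filter or similar estimator. The function name `fuse_heading_step` and the weight `alpha` are assumptions made for this example.

```python
def fuse_heading_step(theta, gyro_rate, wheel_d_theta, dt, alpha=0.9):
    """Blend gyro and wheel estimates of heading change over one step.

    theta         -- current heading estimate (rad)
    gyro_rate     -- angular rate reported by the gyroscope (rad/s)
    wheel_d_theta -- heading change computed from wheel encoders (rad)
    dt            -- duration of the step (s)
    alpha         -- hypothetical weight given to the gyro (0..1)
    """
    # Heading change implied by the gyro over this step.
    gyro_d_theta = gyro_rate * dt

    # Weighted average of the two heading-change estimates.
    d_theta = alpha * gyro_d_theta + (1 - alpha) * wheel_d_theta
    return theta + d_theta
```

A higher `alpha` trusts the gyro more, which helps when the wheels slip; a lower one leans on the encoders, which helps when the gyro bias is large.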

While odometry alone can’t provide perfect localization over long periods (due to accumulating error), it plays a critical role in robot navigation, especially for short-term motion tracking, path following, and as a foundation for more advanced techniques like SLAM (Simultaneous Localization and Mapping) or GPS-augmented systems in outdoor environments.

The error accumulates because each new pose estimate is built on the previous one, so a mistake made at one step is carried into every step that follows. Small inaccuracies in wheel measurements, slippage on surfaces, or uneven terrain cause the robot’s estimated position to diverge from its true position over time. This is why wheel odometry is usually combined with other sensing methods, such as visual or inertial sensors, to improve accuracy and correct for drift.
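The toy simulation below illustrates how a small uncorrected error compounds. It assumes a robot that actually drives in a straight line while its heading estimate picks up a tiny constant bias at every step; `simulate_drift`, `step_len`, and `heading_bias` are hypothetical names and values chosen for the illustration.

```python
import math

def simulate_drift(steps, step_len=0.01, heading_bias=0.0005):
    """Dead-reckon a straight drive with a small heading bias per step.

    Returns the distance (m) between the estimated and true final position.
    """
    x_est = y_est = theta_est = 0.0
    for _ in range(steps):
        theta_est += heading_bias               # uncorrected error added each step
        x_est += step_len * math.cos(theta_est)
        y_est += step_len * math.sin(theta_est)

    x_true = steps * step_len                   # the robot really drove along x only
    return math.hypot(x_est - x_true, y_est)

# Compare the position error after short and long drives.
for steps in (100, 1000):
    print(f"{steps * 0.01:.0f} m travelled, "
          f"position error {simulate_drift(steps):.3f} m")
```

In this model, a tenfold increase in distance produces far more than a tenfold increase in position error, because the heading error keeps bending every later step further off course.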